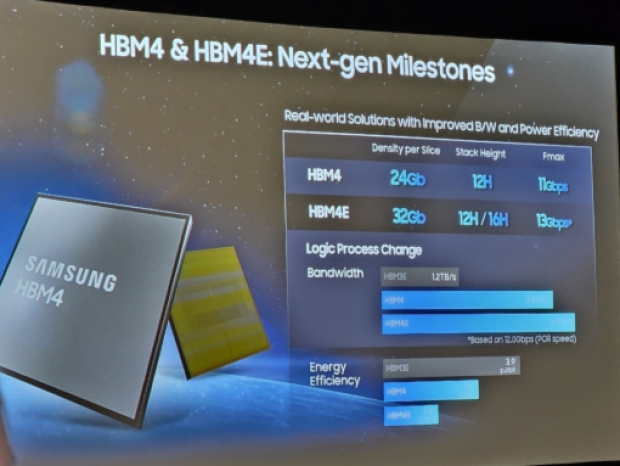

At the Open Compute Project (OCP) Global Summit, Samsung paraded its HBM4 and HBM4E roadmaps. The headline figures for HBM4E are pin speeds of up to 13 Gbps and a quoted 3.25 TB/s of bandwidth per stack, which would plant it among the quickest memory stacks on paper.

Power efficiency is pitched at almost double that of HBM3E, while plain HBM4 is said to hit an 11 Gbps pin speed, outpacing the 8 Gbps set out in the JEDEC HBM4 specification. Local reports say Nvidia pushed hard for faster HBM4 to feed its Rubin parts, and Samsung was the first to publicly claim the higher speed.
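The pin speeds and the per-stack bandwidth are tied by simple arithmetic: bandwidth is the per-pin rate multiplied by the number of data pins, divided by eight bits per byte. The sketch below assumes a 2048-bit interface per stack, the width in the JEDEC HBM4 specification; Samsung has not been quoted here on HBM4E's width, so treat that as an assumption. The 8 Gbps row is the JEDEC HBM4 top rate, included only as a baseline, and `stack_bandwidth_gbs` is a hypothetical helper written for illustration.

```python
# Assumption: 2048 data pins per stack (JEDEC HBM4 width); not confirmed for HBM4E.
INTERFACE_BITS = 2048


def stack_bandwidth_gbs(pin_gbps: float, interface_bits: int = INTERFACE_BITS) -> float:
    """Peak per-stack bandwidth in GB/s: pin rate (Gbit/s) x data pins / 8 bits per byte."""
    return pin_gbps * interface_bits / 8


# Pin speeds from the article, plus the JEDEC HBM4 rate as a reference point.
parts = [("JEDEC HBM4 baseline", 8.0), ("Samsung HBM4", 11.0), ("Samsung HBM4E", 13.0)]

for name, pin in parts:
    gbs = stack_bandwidth_gbs(pin)
    # Show both decimal TB/s (divide by 1000) and the figure a 1024 divisor would give.
    print(f"{name}: {pin:g} Gbps/pin -> {gbs:,.0f} GB/s "
          f"({gbs / 1000:.2f} TB/s decimal, {gbs / 1024:.2f} with a 1024 divisor)")
```

Run as-is, the HBM4E line works out to 3,328 GB/s: 3.33 TB/s in decimal units, or exactly 3.25 if divided by 1,024. That hints, though the source does not confirm it, that the quoted 3.25 TB/s is 13 Gbps across 2,048 pins with binary-style rounding. The same arithmetic puts an 11 Gbps HBM4 stack at 2,816 GB/s.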

Samsung, which fell behind its competitors in HBM3E, has been targeting higher bandwidth than its rivals since the early stages of HBM4 development. With the 'speed battle' it waged on HBM4 close to paying off, its strategy appears to be to move just as quickly on the next generation to turn the tables.

There is a pricing play too. Because the logic die inside HBM4 is built on a 4nm process, Samsung’s foundry arm can keep more of the stack in-house and tune margins. If it keeps its prices down, it could make life awkward for SK hynix and Micron.

The firm talked up efficiency gains for HBM4E and hinted that supply terms for big GPU buyers could be structured to win share fast, even if that means skinny profits early on.

Timelines point to early 2026 for HBM4E to show up alongside HBM4 mass production, which is aggressive but matches the accelerator crowd’s appetite for bandwidth at any cost.