MSI is showcasing a four-node scalable unit built on NVIDIA’s Enterprise Reference Architecture. Each node has eight NVIDIA H200 NVL GPUs and uses NVIDIA Spectrum-X networking for scalable AI workloads. The design can scale up to 32 servers, for a total of 256 H200 NVL GPUs.
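The GPU counts above follow from simple multiplication, sketched here in Python. The constant and function names are illustrative, and the split of the 32-server maximum into whole four-node units is an assumption drawn from the figures quoted, not something MSI has stated.

```python
# Figures taken from the announcement; names are illustrative only.
GPUS_PER_NODE = 8            # eight H200 NVL GPUs per server
NODES_PER_SCALABLE_UNIT = 4  # the four-node unit being showcased
MAX_NODES = 32               # stated scaling ceiling


def total_gpus(nodes: int, gpus_per_node: int = GPUS_PER_NODE) -> int:
    """Return the GPU count for a given number of servers."""
    return nodes * gpus_per_node


gpus_per_unit = total_gpus(NODES_PER_SCALABLE_UNIT)
# Assumption: the ceiling is reached by adding whole four-node units.
max_units = MAX_NODES // NODES_PER_SCALABLE_UNIT
max_gpus = total_gpus(MAX_NODES)

print(f"GPUs per scalable unit: {gpus_per_unit}")
print(f"Scalable units at full scale: {max_units}")
print(f"GPUs at full scale: {max_gpus}")
```

On these numbers, one scalable unit holds 32 GPUs and the full 32-server build corresponds to eight such units.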

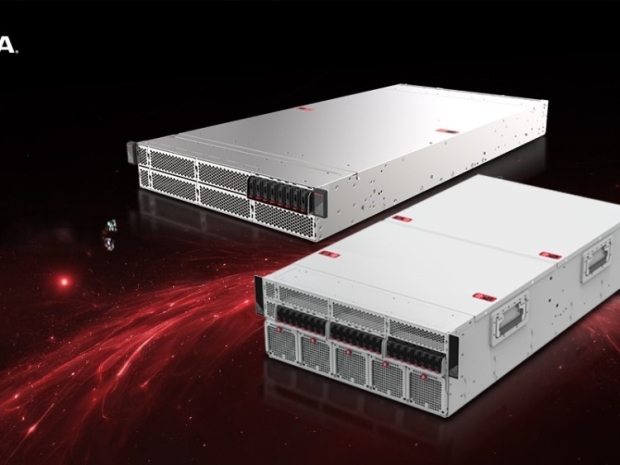

The CG480-S5063 4U AI server features dual Intel Xeon 6 processors, eight FHFL dual-width GPU slots supporting up to 600W, 32 DDR5 DIMM slots, and twenty PCIe 5.0 E1.S NVMe bays. This configuration is tailored for large language models, deep learning training, and fine-tuning.

For more compact needs, the CG290-S3063 2U AI server offers a single-socket Intel Xeon 6 processor, four FHFL dual-width GPU slots, 16 DDR5 DIMM slots, and multiple NVMe storage options, making it suitable for a range of AI workloads from small-scale inferencing to large-scale training.

MSI also unveiled the AI Station CT60-S8060, a desktop system based on NVIDIA’s DGX Station architecture. It features the NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip and up to 784GB of coherent memory, delivering data center-level performance for AI development tasks.

These offerings align with NVIDIA's recent initiatives, including the NVLink Fusion platform, which allows CPUs from partners such as Fujitsu and Qualcomm to be integrated with NVIDIA Blackwell GPUs. The move aims to meet demand for specialised AI infrastructure.

MSI General Manager of Enterprise Platform Solutions Danny Hsu said: “With the explosive growth of generative AI and increasingly diverse workloads, traditional servers can no longer keep pace. MSI’s AI solutions, built on the NVIDIA MGX and NVIDIA DGX Station reference architectures, deliver the scalability, flexibility, and performance enterprises need to future-proof their infrastructure and accelerate their AI innovation.”