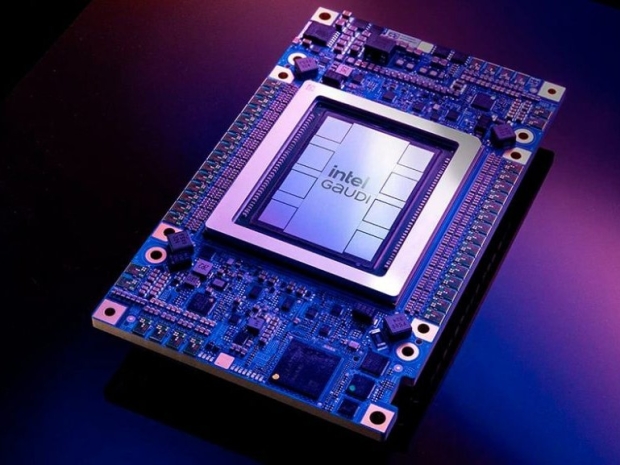

At the OCP Global Summit, Intel showed off a hybrid AI rack system that blends its Gaudi 3 silicon with Nvidia’s latest Blackwell gear. The setup pairs Intel’s Xeon CPUs and AI accelerators with Nvidia’s ConnectX-7 networking and Broadcom’s monster 51.2 Tb/s Tomahawk 5 switches to form a rack-scale system meant to chew through inference workloads with both cost efficiency and raw performance.

In this odd-couple setup, Gaudi 3 handles the ‘decode’ portion of inference, while the B200s are left to flex their matrix muscle in the more compute-heavy ‘prefill’ stage. Intel claims the result is 1.7 times better prefill performance than a B200-only configuration on compact, dense models, though no one outside Intel’s marketing team has verified that figure.
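For readers unfamiliar with the prefill/decode split described above, here is a toy Python sketch of the idea. It is not Intel's or Nvidia's software: the `Request` class, the `prefill`, `decode` and `serve` functions, and the placeholder tokens are all hypothetical, and no real model is run. It only illustrates that prefill touches the whole prompt in one pass and builds a cache, while decode produces tokens one at a time against that cache, which is why the two stages can be sent to different hardware.

```python
from dataclasses import dataclass, field


@dataclass
class Request:
    """A hypothetical inference request (illustrative only)."""
    prompt: list[str]
    max_new_tokens: int
    kv_cache: list[str] = field(default_factory=list)
    output: list[str] = field(default_factory=list)


def prefill(req: Request, device: str = "B200") -> str:
    # Prefill: the entire prompt is processed in a single pass,
    # and the resulting cache is stored on the request.
    req.kv_cache = list(req.prompt)
    return device


def decode(req: Request, device: str = "Gaudi 3") -> str:
    # Decode: tokens are generated one at a time, each step
    # extending the cache built during prefill.
    for i in range(req.max_new_tokens):
        token = f"tok{i}"  # placeholder, not a real model output
        req.output.append(token)
        req.kv_cache.append(token)
    return device


def serve(req: Request) -> list[tuple[str, str]]:
    # Route each stage to its assumed device pool and record where it ran.
    return [("prefill", prefill(req)), ("decode", decode(req))]


if __name__ == "__main__":
    request = Request(prompt=["why", "mix", "vendors", "?"], max_new_tokens=3)
    for stage, device in serve(request):
        print(f"{stage} ran on {device}")
    print(f"generated {len(request.output)} tokens")
```

In a real deployment the cache built during prefill would have to be shipped across the network to the decode devices, which is where the rack's networking gear comes in.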

Each rack includes 16 compute trays, and each tray crams in two Xeon CPUs, four Gaudi 3 accelerators, four NICs, one Nvidia BlueField-3 DPU and a whole lot of Ethernet. It’s a clever move if your Gaudi platform isn’t exactly flying off shelves solo. This way, Intel gets to shift its surplus silicon while riding Nvidia’s AI wave.
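The per-rack totals implied by those tray counts are easy to tally. A minimal sketch, using only the figures quoted above; the names `TRAYS_PER_RACK`, `PER_TRAY` and `rack_totals` are illustrative, and the B200 side of the system is left out because no count is given for it.

```python
# Figures as quoted in the article: 16 trays per rack, and per tray
# two Xeons, four Gaudi 3 accelerators, four NICs and one BlueField-3 DPU.
TRAYS_PER_RACK = 16
PER_TRAY = {
    "Xeon CPUs": 2,
    "Gaudi 3 accelerators": 4,
    "NICs": 4,
    "BlueField-3 DPUs": 1,
}


def rack_totals(trays: int = TRAYS_PER_RACK, per_tray: dict[str, int] = PER_TRAY) -> dict[str, int]:
    """Multiply each per-tray count by the number of trays in a rack."""
    return {part: count * trays for part, count in per_tray.items()}


if __name__ == "__main__":
    for part, total in rack_totals().items():
        print(f"{part}: {total}")
```

That works out to 32 Xeons, 64 Gaudi 3 accelerators, 64 NICs and 16 DPUs per rack.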

The Gaudi architecture was pitched as a cheaper alternative in a market Nvidia dominates outright. But its software stack remains half-baked, and insiders already say the platform is headed for obsolescence before long.

This is Chipzilla trying to cash in while it still can. Nvidia, for its part, gets to show off that its networking stack can tie the whole lot together. Everyone wins, except perhaps the customers who’ll need to stitch together two very different AI software ecosystems just to make this Frankenstein rack run.